| Jackie Zhong (A paper written under the guidance of Prof. Raj Jain) |


A Peer-to-Peer (P2P) network is a self-organized network of peers that share computing resources without the coordination of a central server. The decentralized nature of P2P networking makes its applications fault-tolerant and easy to scale. In the 1990s and early 2000s, the popularity of P2P networking, led by P2P file-sharing applications such as BitTorrent, reached its peak. Since then, the Internet has moved toward a more centralized model, and interest in P2P networking has declined both among the general public and in academia. This paper examines the history of P2P networking to answer the question of why P2P networking is useful. Furthermore, by analyzing recent P2P applications and comparing them with newly emerged networking concepts, this paper seeks to provide insight into the decline of P2P networking in the past decade as well as its potential in the future.

The Internet today is probably the largest project humanity has ever accomplished, with more than 18 billion connected devices [CISCO20] and an estimated 1.145 trillion MB of data generated per day [Bulao21]. Most of this data is processed and stored in hyper-scale data centers, which have capacities on the scale of thousands of TB [Arman16]. Throughout the history of the Internet, however, there have been visions and applications of an alternative, decentralized model, which has gone through different phases of development over the past decades. The decentralized network, commonly known as a P2P network, does not require huge data centers. Instead, information is distributed among the members of the network. By doing so, a P2P network avoids having a single point of failure and can scale easily as more devices join the network.

P2P networking was highly popular around the start of the new century, with many different P2P file-sharing applications being developed and commercialized. However, as new networking technologies and applications continued to emerge, businesses, academia, and individuals gradually came to favor more centralized network models, which had become much cheaper to implement and easier to manage. Even so, the decentralized nature of P2P networking keeps it relevant for applications that want neither central control (e.g., cryptocurrency) nor a single point of failure.

The Internet of the last century looked very different from the Internet of today. There were few hosts on the regional networks, which were used primarily for research and by the military. Even so, there were already visions of a future network whose members could communicate point-to-point and have a separation of function (client and server). With the development of TCP/IP and the World Wide Web in the 1980s and 1990s, the Internet finally reached the general public and began its rapid development.

ARPANET (Advanced Research Projects Agency Network), the predecessor of the Internet, was launched in the 1960s for the purpose of decentralizing information. As the Cold War was heating up, the U.S. Department of Defense was looking for ways to avoid a single point of failure and to distribute information even after a nuclear attack [Georgia21]. In 1969, the same year ARPANET was launched, Steve Crocker at UCLA (where one of the first four ARPANET hosts was located) wrote RFC (Request for Comments) 1, in which he used the term "host-to-host" to characterize ARPANET connections [RFC1]. This idea is regarded as an early vision of P2P networking.

A few months later in RFC 5, Jeff Rulifson characterized host machines in ARPANET that process packets and send messages as "server-host",

and others that receive and display messages as "client-host" [RFC5]. Though still subtle back then, such a separation of

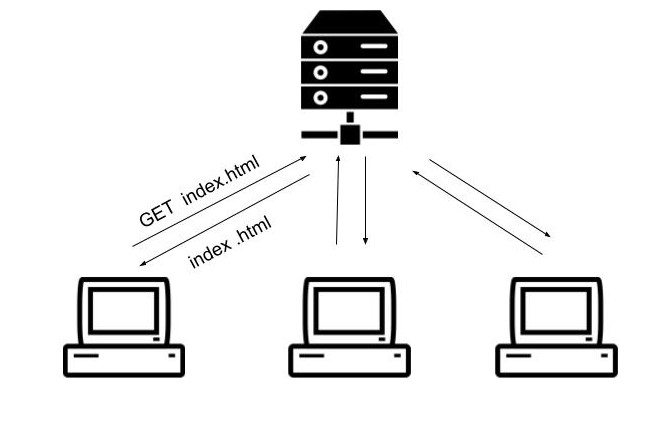

function between network members hints for a modern client-server model (Fig. 1) as opposed to the P2P model (Fig. 2) implied in RFC 1.

In a client-server model, the roles of the members are clearly separated. Members known as servers manage resources, receive requests, and deliver services. Other members known as clients send requests to the servers and receive services. Note that a client or server is an abstract entity defined by the actions it performs and does not necessarily correspond to the underlying physical device (whereas a client-host or server-host does refer to an actual machine). For example, a modern general-purpose computer (e.g., a PC) can act as both client and server, and a server can be a piece of distributed software running on multiple devices that are geographically separated and connected via a network.

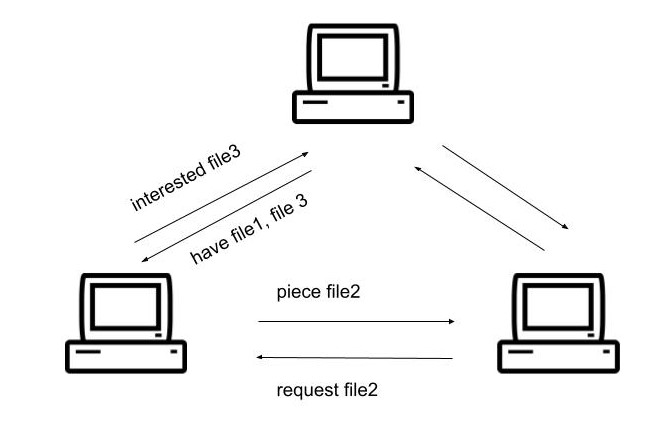

In contrast, each member of a P2P network, known as a peer, plays the roles of both client and server. Peers self-organize in the absence of a central server. For example, in a P2P file-sharing network, resources are not stored on a server but are distributed among the peers, potentially split and/or duplicated. A peer can act like a client by requesting a piece of data (e.g., an image) from another peer, and like a server by responding to requests from other peers.
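The dual role of a peer can be made concrete with a small in-memory sketch. It is only an illustration: the `Peer` class, its method names, and the file name are hypothetical, and a real peer would talk over a network instead of calling methods directly.

```python
class Peer:
    """A toy peer that both serves its own data and requests data from others."""

    def __init__(self, name):
        self.name = name
        self.store = {}  # resource name -> bytes held locally

    def serve(self, resource):
        """Server role: answer a request from another peer (None if not held)."""
        return self.store.get(resource)

    def fetch(self, resource, neighbors):
        """Client role: ask each known peer in turn and keep a local copy."""
        for peer in neighbors:
            data = peer.serve(resource)
            if data is not None:
                self.store[resource] = data  # this peer can now serve it too
                return data
        return None


alice, bob, carol = Peer("alice"), Peer("bob"), Peer("carol")
alice.store["cat.png"] = b"image-bytes"
bob.fetch("cat.png", [alice])         # bob acts as a client of alice
print(carol.fetch("cat.png", [bob]))  # bob now acts as a server for carol
```

Once `bob` has fetched the image, `carol` can obtain it without ever contacting `alice`, which is the duplication and distribution of resources described above.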

In the 1970s, Robert Kahn and Vinton Cerf developed and tested TCP/IP (Transmission Control Protocol/Internet Protocol), which standardized communication between machines on different networks. On January 1, 1983, ARPANET officially switched to TCP/IP, marking the birth of the Internet [Georgia21].

Though the Internet paved the way for geographically separated machines to exchange data using a standardized protocol, information on the Internet was still rather unorganized, scattered, and hard to access. As a result, the Internet was still used mainly in research, the military, and some businesses. In 1989, Tim Berners-Lee, an employee of CERN (European Organization for Nuclear Research), proposed a system for sharing and managing information over a network by associating documents with network addresses and using hyperlinks to tie them together [Grossman18]. This proposal, known as the World Wide Web initiative, shaped how information is organized in today's network by introducing some of its basic components, including HTTP (Hypertext Transfer Protocol) and HTML (HyperText Markup Language). In 1991, the first web server was built, and in 1993 CERN put the web into the public domain, marking the birth of the World Wide Web [Grossman18].

The World Wide Web is arguably the first significant implementation of a client-server model, with the web server processing HTTP requests and sending HTML files to the clients. Considering that the initial purpose of the Web was to provide a more stable and efficient way to locate and manage information by associating it with an identifier (i.e., which file at which address/machine), the client-server model does seem like a reasonable approach. The web service model is discussed further in section 4.

As the Internet and the PC industry quickly expanded among the general public in the 1990s, demand for content on the Internet, especially multimedia content, surged. Using centralized servers to store and distribute multimedia content was too expensive at the time because of limited network bandwidth and computer storage, so P2P applications emerged as an alternative that distributed data among the peers in the network. Other P2P applications, such as voice-calling applications, were also developed during this era.

As illustrated in section 2.2, each peer in a P2P network needs to be able to request as well as contribute computing resources (store data, send data over the network, etc.), and the more peers there are, the higher the network capacity is. Therefore, the rapid spread of the Internet and the Personal Computer (PC) among the general public in the 1990s satisfied the preconditions of P2P networking. The real incentive for P2P networking, however, was the lack of a central repository for multimedia content, especially MP3 files, which became more and more popular on the Internet in the 1990s [Bonderud19].

The reasons behind the lack of a central server for multimedia content involve not only technical but also practical and business considerations. It is undisputed, however, that implementing such a server was much harder at the time, given larger uncompressed files (an average song was around 42 MB), poor bandwidth (the majority of users had 56 kbps dial-up connections as of 2000), and more expensive storage [Bonderud19]. As a result, Napster, a P2P file-sharing application focused on MP3 files and originally released in 1999, offered a wide range of music to share and download and quickly became popular, attracting more than 80 million users in two years [Bonderud19].
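To put the figures above in perspective, the short calculation below estimates how long a single uncompressed song would take to download over dial-up. It is a back-of-the-envelope sketch that assumes decimal units (1 MB = 1,000,000 bytes) and that the link sustains its full nominal 56 kbps, which real dial-up connections rarely did; the function name is illustrative.

```python
def download_minutes(size_mb, link_kbps):
    """Transfer time in minutes, assuming decimal units and full link speed."""
    size_bits = size_mb * 1_000_000 * 8
    seconds = size_bits / (link_kbps * 1_000)
    return seconds / 60


print(download_minutes(42, 56))  # 100.0
```

Under these optimistic assumptions a 42 MB song takes about 100 minutes, which helps explain why serving such files to many users from one place was impractical at the time.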

After Napster struck first, other P2P file-sharing applications quickly emerged in the early 2000s, with BitTorrent, a P2P application-layer protocol running on top of TCP/UDP, being the most popular. Both Napster and BitTorrent used a hybrid model that builds a central index of files while the actual files are distributed among the peers.

The hybrid model includes a central server, known as a "tracker" in BitTorrent, that does not host any files but uses file metadata to help a user connect to other peers sharing the files [Cohen17]. The actual files, possibly split into chunks if large, are stored on users' computers. The file-sharing software running on those computers queries the tracker for where files are located, connects to peers to ask for a file, and downloads the file from one or more peers. Note that the tracker is not a required feature, as the file metadata can be distributed as well, which would make the network completely decentralized. However, having a tracker does make querying file locations faster and more convenient.
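The division of labor in the hybrid model can be sketched as follows. This is a toy, in-memory illustration and not the actual tracker protocol: the `Tracker` class, its method names, and the addresses are hypothetical, and the point is only that the tracker stores who has a file, never the file itself.

```python
from collections import defaultdict


class Tracker:
    """Toy tracker: maps a file identifier to the peers that hold it.

    It keeps only metadata (peer addresses) and never any file data.
    """

    def __init__(self):
        self.swarms = defaultdict(set)  # file id -> set of (host, port)

    def announce(self, file_id, peer_addr):
        """A peer reports that it holds (part of) the file."""
        self.swarms[file_id].add(peer_addr)

    def leave(self, file_id, peer_addr):
        """A peer reports that it is going offline."""
        self.swarms[file_id].discard(peer_addr)

    def peers_for(self, file_id, asking_peer=None):
        """Return known holders of the file, excluding the asker itself."""
        return sorted(self.swarms.get(file_id, set()) - {asking_peer})


tracker = Tracker()
tracker.announce("song.mp3", ("10.0.0.1", 6881))
tracker.announce("song.mp3", ("10.0.0.2", 6881))
print(tracker.peers_for("song.mp3", asking_peer=("10.0.0.2", 6881)))
# [('10.0.0.1', 6881)]
```

After receiving the list, the asking peer would connect to the returned addresses directly; the download itself never passes through the tracker.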

To briefly illustrate how peers in a P2P file-sharing network communicate with each other and organize themselves, Table 1 introduces some of the BitTorrent message types involved in a file transaction.

| Message type (indicated by a single byte in the packet) | Functionality |
|---|---|
| interested | Tell a peer that the sender wants data that the peer holds. |
| have | Announce the index of a piece that the sender has downloaded and verified. |
| request | Ask a peer for a block of a piece (piece index, offset, and length). |
| cancel | Withdraw a previously sent request, typically near the end of a download. |
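For readers who want to see what such messages look like on the wire, the sketch below frames a few of them the way the BitTorrent specification [Cohen17] describes: a 4-byte big-endian length prefix, a single byte identifying the message type, and a type-specific payload. The helper names are illustrative, and the handshake, choking, and the remaining message types are left out.

```python
import struct

# Message IDs from the BitTorrent peer wire protocol.
INTERESTED, HAVE, REQUEST, CANCEL = 2, 4, 6, 8


def encode(msg_id, payload=b""):
    """Frame a message: 4-byte big-endian length, 1-byte ID, then payload."""
    return struct.pack(">IB", 1 + len(payload), msg_id) + payload


def encode_have(piece_index):
    """'have' carries the index of one piece the sender now holds."""
    return encode(HAVE, struct.pack(">I", piece_index))


def encode_request(piece_index, begin, length):
    """'request' names a block: piece index, byte offset, and block length."""
    return encode(REQUEST, struct.pack(">III", piece_index, begin, length))


def decode(frame):
    """Return (msg_id, payload) for one complete framed message."""
    length, msg_id = struct.unpack(">IB", frame[:5])
    return msg_id, frame[5:4 + length]


print(encode(INTERESTED).hex())            # 0000000102
print(encode_have(5).hex())                # 000000050400000005
print(decode(encode_request(1, 0, 16384))[0])  # 6
```

A `cancel` message uses the same three-integer payload as `request`, so it could be produced with `encode(CANCEL, ...)` in the same way.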

BitTorrent, like many other P2P applications, is concerned with more than just how peers communicate. It implements a system to ensure file integrity, a bartering system (users upload files to others while they download) to prevent freeriding [Pouwelse05], and more. However, what really distinguishes P2P file-sharing applications from a centralized server network is their self-organization. Peers join and leave the network as they please (by running or closing the software), providing on-demand bandwidth and storage. Information circulates the network dynamically, with copies being made (a peer uploads it) and destroyed (a peer owning it leaves the network). This feature enabled decentralized networks around 2000 to hold an amount of information that centralized servers could not.
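The file-integrity mechanism mentioned above can be illustrated with a short sketch. In BitTorrent, the metadata of a file lists a SHA-1 hash for each fixed-size piece [Cohen17], so a downloader can check every piece it receives from an untrusted peer. The function names and the tiny piece length below are illustrative assumptions; real torrents use far larger pieces.

```python
import hashlib

PIECE_LENGTH = 4  # tiny illustrative value; real pieces are much larger


def piece_hashes(data, piece_length=PIECE_LENGTH):
    """Split data into pieces and return the SHA-1 digest of each piece."""
    return [hashlib.sha1(data[i:i + piece_length]).digest()
            for i in range(0, len(data), piece_length)]


def verify_piece(index, piece, expected_hashes):
    """Accept a received piece only if its hash matches the published one."""
    return hashlib.sha1(piece).digest() == expected_hashes[index]


original = b"hello world!"
hashes = piece_hashes(original)          # published with the file metadata
print(verify_piece(0, b"hell", hashes))  # True
print(verify_piece(1, b"XXXX", hashes))  # False: corrupted or forged piece
```

A piece that fails the check is simply discarded and requested again, possibly from a different peer.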

Though P2P file-sharing applications accounted for the vast majority of P2P traffic in the 2000s [Karagiannis05], they were not the only P2P applications. Skype (launched in 2003) implemented a P2P network that allowed users to send voice data and messages directly to each other. For voice-calling and messaging applications, the P2P model is arguably a very natural approach, as data is generated by one peer and intended for another. However, in a real-life application like Skype, the issue is far more complicated. Peers in a network can have limited bandwidth and computing power, or simply lack a low-latency direct connection to one another, depending on the network topology. The Skype network therefore elected super nodes, which had public IP addresses and enough bandwidth, memory, etc., to act as the end points for ordinary peers, as illustrated in Fig. 3. This feature made communication in the Skype network not completely point-to-point. However, the network still largely preserved its decentralized nature, as there was no centralized server (except for the login server) and user information was still stored and distributed in a decentralized manner, like that in a P2P file-sharing network [Baset04].
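The idea of promoting well-provisioned peers can be sketched as a simple filter. This is not Skype's actual election procedure, which was never published in detail; the `Node` fields and the numeric thresholds below are hypothetical and only mirror the criteria named in the text (public IP address, bandwidth, memory).

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    has_public_ip: bool
    bandwidth_kbps: int
    free_memory_mb: int


def is_super_node_candidate(node, min_bandwidth_kbps=512, min_memory_mb=256):
    """Hypothetical rule: reachable from outside and with resources to spare."""
    return (node.has_public_ip
            and node.bandwidth_kbps >= min_bandwidth_kbps
            and node.free_memory_mb >= min_memory_mb)


nodes = [
    Node("laptop-behind-nat", False, 2000, 1024),
    Node("campus-desktop", True, 10000, 2048),
    Node("old-pc", True, 128, 64),
]
print([n.name for n in nodes if is_super_node_candidate(n)])
# ['campus-desktop']
```

Ordinary peers such as the laptop behind a NAT would then connect through a selected super node instead of directly to each other.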

With the expansion of the PC industry and the Internet in the 1990s, the general public had spare computer storage and bandwidth with which to store and share content, which satisfied the preconditions for a P2P network. On the other hand, the limits of computer storage and network bandwidth at the time obstructed the development of powerful, centralized servers. As a result, P2P applications, led by file-sharing applications, flourished around 2000.

The development of processors, storage, and networking technologies in the past decade led to new networking ideas like cloud computing and the Internet of Things. P2P networking, on the other hand, did not keep up with the times, as its traffic volume and the interest in it kept decreasing. The development of P2P networking is challenged by issues in availability, management, security, and performance that stem from its current design.

The hype around P2P networking in the 2000s did not last long, as Internet applications became more diverse and new networking technologies quickly emerged. Though there is no consistent tracking of P2P traffic volume, reports from different sources since the late 2000s give a glimpse of the decline in P2P traffic, especially in North America, as illustrated in Table 2.

| Year | Comments | Source |
|---|---|---|
| 2004 | P2P traffic accounts for 62% of Internet traffic worldwide and 33% in the US. | [Sar06] |
| 2007 | Web traffic overtakes P2P traffic, largely due to the rise of YouTube. | [Bailey17] |
| 2011 | P2P traffic accounts for 19% of North American Internet traffic and is beaten by Netflix alone during peak hours. | [Singel11] |
| 2013 | P2P traffic accounts for 7.39% of Internet traffic in the US, a drop not only in percentage but also in actual traffic volume. | [Kraw13] |
| 2015 | P2P traffic is estimated at 3% in the US, on par with Hulu, then the fifth biggest video streaming platform. | [Smith16] |

A caveat here is the way P2P traffic is measured. The use of VPNs, the use of unconventional ports such as 80 (usually for HTTP) by P2P protocols [Karagiannis05], and other practices can all make P2P traffic hard to detect. Other measurements, however, clearly indicate declining interest in P2P networking among both the general public and academia.

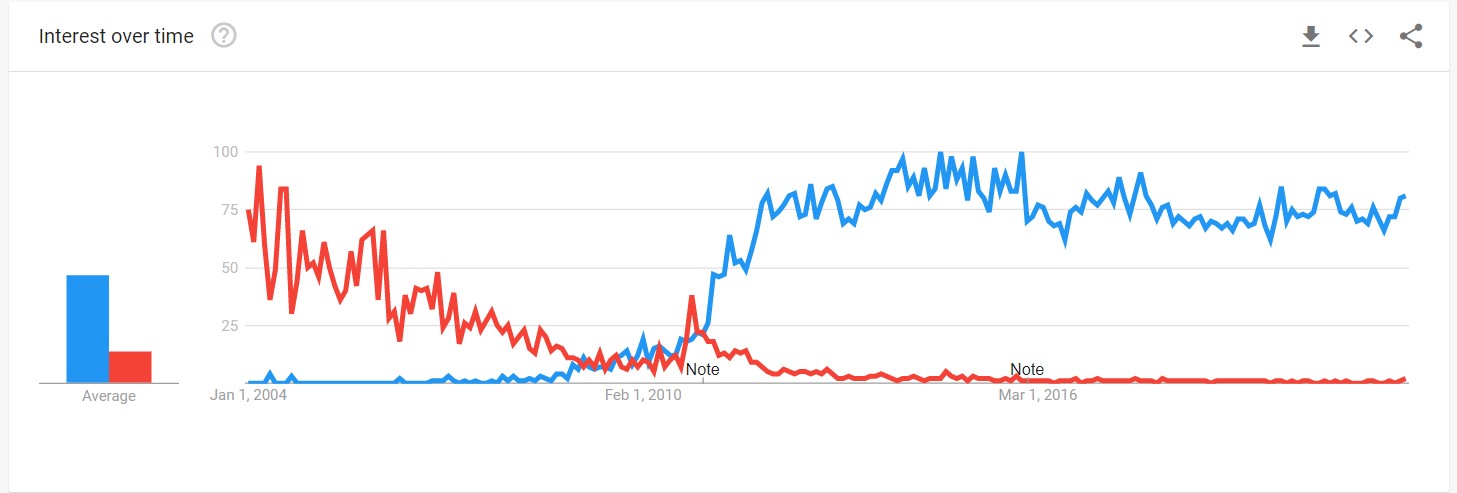

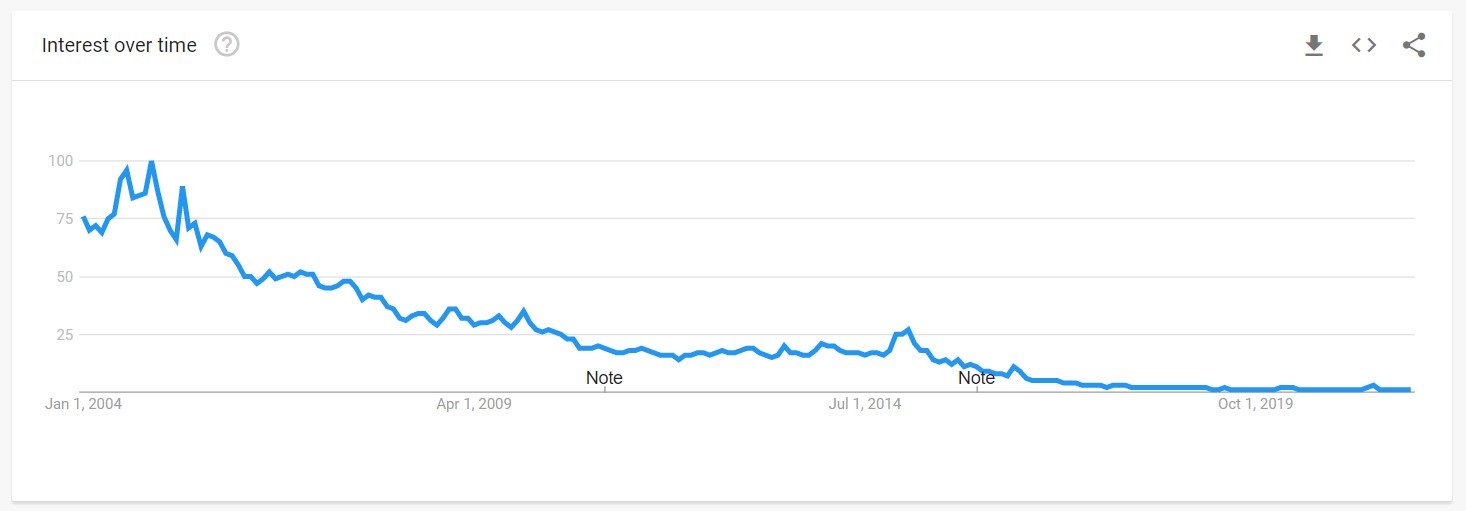

Google Trends is a Google website that analyzes the queries made through Google's search engine. Considering Google's popularity in most regions of the world, it should be a good indicator of people's interest in a topic. From Fig. 4 and Fig. 5, it can be observed that interest in P2P file sharing does seem to correlate with decreasing P2P traffic. Both P2P sharing as a general search term and BitTorrent as a specific P2P protocol show a very clear trend of decreasing interest over the same period (2004 - present). In comparison, newly emerged technologies, like cloud storage as illustrated in Fig. 3, went through a rising trend and are now stable.

In the academic realm, there also seems to be less interest in P2P networking, with fewer research papers on P2P and the cancellation in 2015 of the International Workshop on Peer-to-Peer Systems (IPTPS), an event that had been held since 2002 [Kisembe17].

An important thing to note is that before 2013, P2P traffic volume was still increasing or at least holding steady. Its decreasing share of overall Internet traffic was mainly due to the rise of other traffic, chiefly web traffic. It was not until 2013 that an actual decrease in P2P traffic volume occurred. Therefore, the question is not so much why P2P networking suddenly became unpopular, but rather what P2P networking failed to do to keep up with novel Internet applications.

Entering the new century, Moore's law kept proving its doubters wrong. Transistors continued to shrink, resulting in faster processors and larger storage capacity. The decreasing size and cost of hardware produced smaller but more powerful computing devices [COST21]. Such development, together with novel networking technologies, also increased network bandwidth and lowered latency through more powerful routers and switches.

The increased capacity of computing resources, along with new standardization efforts (e.g., the Open Compute Project in 2011), fueled the development of data centers that are more powerful and scalable than before. Providers now have spare computing resources to rent out on demand, giving rise to cloud computing (marked by Amazon releasing EC2 in 2006).

Arguably the biggest incentive for P2P networking is to utilize the spare computing resources on PCs and other devices that are small compared to a server. However, the rise of data centers and cloud computing diminished the advantages and exposed the disadvantages of P2P networking in the following ways:

Higher Internet bandwidth and lower latency also fuel the development of real-time applications such as video streaming, video calling, and multi-player gaming. Unlike traditional web applications, which can be asynchronous (e.g., waiting a few more seconds for a web page is not the end of the world), these real-time applications require fast (on the scale of milliseconds), stable delivery of information.

The availability and performance issues of P2P networking obstruct its development for real-time applications. For applications whose users need a consistent view of the state, for example what the other users have typed in a Google Doc or where the other players are in a game, the P2P network needs to do extra work in synchronizing, as each user needs all the others to know its information. In contrast, in a client-server model only the central server keeps the information, updates it as needed, and distributes it to all users. In fact, operations in a P2P network generally carry more overhead because peers exchange control messages with all the others. This can lead to performance issues given limited computing resources, and there have also been reports of Internet service providers (ISPs) throttling P2P traffic because of its high bandwidth use [Roettgers08].
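The synchronization overhead can be quantified with a simple count. The sketch below assumes an idealized round in which every one of n users has one state update to share, a full mesh in the P2P case, and a single central server in the client-server case; real systems batch, compress, or gossip updates, so these numbers are only an upper-level comparison.

```python
def full_mesh_messages(n):
    """Each of n peers sends its update directly to the other n - 1 peers."""
    return n * (n - 1)


def client_server_messages(n):
    """Each of n clients uploads once, and the server replies once to each."""
    return n + n


for n in (10, 100):
    print(n, full_mesh_messages(n), client_server_messages(n))
# 10 90 20
# 100 9900 200
```

The message count grows quadratically in the full mesh but only linearly with a central server, which is one way to read the overhead argument above.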

In addition to the overhead, the availability issue of P2P networks also raises concerns about consistent content delivery. Unlike a client-server architecture, a P2P network is highly dynamic, with peers that have different bandwidths and that join and leave the network constantly [Kumb11]. For applications like video streaming, this is a problem: because peers that are uploading the content can leave at any time, extra work has to be done to make sure the content is delivered consistently.
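A rough way to reason about this availability problem is to ask how likely it is that at least one holder of a piece of content is online. The sketch below makes two strong simplifying assumptions that are not from the cited work: every peer is online independently, and each with the same probability p. Under those assumptions, content replicated on k peers is reachable with probability 1 - (1 - p)^k.

```python
def content_availability(p_online, replicas):
    """Probability that at least one of `replicas` independent peers is online."""
    return 1 - (1 - p_online) ** replicas


for k in (1, 3, 10):
    print(k, round(content_availability(0.3, k), 3))
# 1 0.3
# 3 0.657
# 10 0.972
```

Even with unreliable peers, availability improves quickly with replication, but the network has to spend storage and bandwidth maintaining those copies, which is part of the extra work mentioned above.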

Another rising networking topic of the past decade is IoT (Internet of Things). IoT consists of small "things" that are not traditional computers (e.g., laptops, desktops) but have the capacity to collect information and connect to the Internet, such as smart watches and smart fridges. To minimize size and cost or to satisfy physical constraints (e.g., having to fit in a sensor), many IoT devices are meant to be small, with limited storage and computing capacity. Therefore, a P2P network model with a flat network topology does not seem appropriate.

Consider MQTT, one of the most popular IoT protocols. An IoT device only needs to send (publish) a message to the central broker, which is responsible for delivering the message to a potentially large number of subscribers interested in what the device says. If the small IoT device instead had to send messages directly to all of its subscribers, it would bear the extra burden of keeping track of all the subscribers and sending extra messages over the Internet. Given the limited computing resources of a small IoT device, such a P2P connection model may not be cost-efficient in many cases.
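The publish/subscribe pattern behind this argument can be sketched with a minimal in-memory broker. This is not an MQTT implementation: there are no network connections, topic wildcards, or quality-of-service levels, and the class, topic, and subscriber names are illustrative. It only shows that the device sends one message while the broker performs the fan-out.

```python
from collections import defaultdict


class Broker:
    """Toy central broker that fans each published message out to subscribers."""

    def __init__(self):
        self.subscribers = defaultdict(list)  # topic -> list of callbacks

    def subscribe(self, topic, callback):
        self.subscribers[topic].append(callback)

    def publish(self, topic, message):
        """Deliver to every subscriber of the topic; return the delivery count."""
        callbacks = self.subscribers.get(topic, [])
        for callback in callbacks:
            callback(topic, message)
        return len(callbacks)


received = []
broker = Broker()
broker.subscribe("kitchen/fridge/temp", lambda t, m: received.append(("phone", m)))
broker.subscribe("kitchen/fridge/temp", lambda t, m: received.append(("dashboard", m)))

# The sensor sends a single message and needs no knowledge of its subscribers.
deliveries = broker.publish("kitchen/fridge/temp", "4.5C")
print(deliveries, received)
# 2 [('phone', '4.5C'), ('dashboard', '4.5C')]
```

Adding a third subscriber changes nothing on the device side, whereas in a direct P2P design the device itself would have to track and message each new subscriber.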

As new networking applications like cloud computing and video streaming were developed in recent decades, P2P networking was limited by its performance issues (overhead in communication), availability issues (peers joining and leaving at random), and security and management issues. As a result, P2P networking failed to carry over to many new applications and has been on a declining trend since the mid-2000s.

The need for networks that are easier to manage and have better availability has led to the dominance of the client-server model over the P2P model. The decentralized nature of P2P, however, means that it can never be fully replaced. Despite the current odds against it, P2P networking may still flourish in applications that are limited by centralization.

A CDN (content delivery network) is a centralized network model commonly used in video streaming that consists of geographically distributed data centers and proxy servers in order to deliver lower-latency service. Even with more advanced network virtualization technologies, building a high-coverage CDN that consistently delivers low-latency service is no easy task. There have been proposals to add P2P features to the edge of a CDN to form a hybrid CDN-P2P network. In a hybrid CDN-P2P network, a user in a region reports to a CDN node (e.g., a proxy server), but instead of always getting content from the central server, the user may get it from another user who is streaming the same content in the same mesh managed by that CDN node, for lower latency and less redundancy [Xu06].
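The source-selection step of such a hybrid design can be sketched as follows. This is a simplified illustration and not the scheme analyzed in [Xu06]: the `CdnNode` class, its bookkeeping, and the rule for picking a peer are all assumptions, and it supposes that a user who has been given a source for a chunk goes on to hold that chunk.

```python
class CdnNode:
    """Toy CDN edge node that redirects users to nearby peers when possible."""

    def __init__(self):
        self.holders = {}  # chunk id -> set of user ids in this node's mesh

    def locate(self, chunk_id, user):
        """Return ('peer', user_id) if another user holds the chunk, else ('cdn', None)."""
        peers = self.holders.get(chunk_id, set()) - {user}
        source = ("peer", min(peers)) if peers else ("cdn", None)
        # Assume the user now holds the chunk and can serve later requests.
        self.holders.setdefault(chunk_id, set()).add(user)
        return source


node = CdnNode()
print(node.locate("video-1/chunk-0", "u1"))  # ('cdn', None)
print(node.locate("video-1/chunk-0", "u2"))  # ('peer', 'u1')
```

Only the first viewer in the mesh draws the chunk from the CDN; later viewers of the same content are served by their neighbors, which is where the savings in latency and redundant transfers would come from.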

Cryptocurrencies like Bitcoin have quickly gained popularity in recent years, in part because of their safety (the record cannot be destroyed as long as the Internet still exists). Bitcoin is not stored on a central server; instead, it exists as a system of records that is duplicated and distributed. Bitcoin transactions are verified by devices in the network (known as miners), packaged into blocks, and chained together. Anybody can download the complete transaction record (the blockchain) from the Internet. The more devices there are verifying and storing the transactions, the harder it is to cheat, because hacking millions of devices or controlling a majority of the miners (the so-called 51% attack) in order to change the information is either not cost-effective or nearly impossible. As a reward for the work of verifying transactions, members of the network get a chance to earn cryptocurrency (Proof of Work). Bitcoin relies on many other important technologies, such as cryptography and blockchain, but it is generally considered a P2P network because of its decentralized nature and its lack of a central coordinator (unlike a banking system).
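The two ideas of chaining blocks and requiring work to add one can be illustrated with a toy example. It is deliberately not Bitcoin's actual format: the block layout, the single SHA-256 over a text string, and the "leading zeros" difficulty rule are simplifying assumptions, and the transactions are made-up strings.

```python
import hashlib


def block_hash(prev_hash, transactions, nonce):
    """Hash of a toy block; it depends on the previous block's hash."""
    payload = f"{prev_hash}|{transactions}|{nonce}".encode()
    return hashlib.sha256(payload).hexdigest()


def mine(prev_hash, transactions, difficulty=3):
    """Proof of work: search for a nonce whose hash starts with enough zeros."""
    prefix = "0" * difficulty
    nonce = 0
    while not block_hash(prev_hash, transactions, nonce).startswith(prefix):
        nonce += 1
    return nonce


def is_valid_chain(chain, difficulty=3):
    """Any peer can re-check every link and every proof of work."""
    prefix = "0" * difficulty
    prev = "0" * 64
    for block in chain:
        h = block_hash(prev, block["tx"], block["nonce"])
        if block["prev"] != prev or not h.startswith(prefix):
            return False
        prev = h
    return True


chain, prev = [], "0" * 64
for tx in ["alice->bob:1", "bob->carol:1"]:
    nonce = mine(prev, tx)
    chain.append({"prev": prev, "tx": tx, "nonce": nonce})
    prev = block_hash(prev, tx, nonce)

print(is_valid_chain(chain))         # True
chain[0]["tx"] = "alice->mallory:1"  # tamper with an old transaction
print(is_valid_chain(chain))         # False
```

Changing an old block invalidates every block after it, so a cheater would have to redo the work for the whole tail of the chain faster than the rest of the network extends it.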

As of now, the Internet largely follows a centralized model, with most data being stored and processed in data centers. Admittedly, the centralized model has the advantages of being easy to manage and highly available. However, even with novel technologies and standards, deploying central servers with high geographic coverage can still be expensive. Therefore, a hybrid model that implements a P2P network at its edge may still be useful. In addition, for applications that reject a central controller, like cryptocurrency, a P2P network is clearly the appropriate model.

Nowadays, information is centralized not only in the way it is stored (in data centers) but also in the way it is controlled and delivered, with concepts like SDN (software-defined networking) that separate the control plane from the routers and unify it into an easy-to-manage system under centralized software control. Fueled by the development of computing and networking technologies, as section 4 points out, centralization gave rise to applications that are cheaper, faster, more capable, and more scalable, thereby displacing the once-popular P2P model, which is harder to manage and involves a lot of overhead.

However, the centralized Internet still has issues with single points of failure and with trust in its owners (e.g., companies selling or exploiting the information stored on their servers). By examining the history of the Internet, it is clear that the popularity of different network models has shifted as a result of novel concepts and technologies. Therefore, it is still important to study the features of P2P networks, whose decentralized nature makes them hard to replace fully and which may yet be promoted by future technologies.

| [CISCO20] | "Cisco Annual Internet Report (2018-2023) White Paper". Retrieved 14 November 2021, https://www.cisco.com/c/en/us/solutions/collateral/executive-perspectives/annual-internet-report/white-paper-c11-741490.html |

| [Bulao21] | Jacquelyn Bulao, "How Much Data is Created Every Day in 2021?", 2021, https://techjury.net/blog/how-much-data-is-created-every-day/#gref |

| [Arman16] | S. Arman, S. Sarah, S. Dale, and 7 others, "United States Data Center Energy Usage Report", 2016, https://www.researchgate.net/publication/305400181_United_States_Data_Center_Energy_Usage_Report/citation/download |

| [RFC5] | Jeff Rulifson, "Request for Comments 5", 1969, https://datatracker.ietf.org/doc/html/rfc5 |

| [RFC1] | Steve Crocker, "Host Software", 1969, https://datatracker.ietf.org/doc/html/rfc1 |

| [Georgia21] | University System of Georgia's Online Library, "A Brief History of Internet". Retrieved 16 November 2021, https://www.usg.edu/galileo/skills/unit07/internet07_02.phtml#:~:text=January%201%2C%201983%20is%20considered,to%20communicate%20with%20each%20other. |

| [Grossman18] | David Grossman, "On This Day 25 Years Ago, the Web Became Public Domain", 2018, https://www.popularmechanics.com/culture/web/a20104417/www-public-domain/ |

| [Bonderud19] | Doug Bonderud, "Technology in the 2000s: Of Codecs and Copyrights", 2019 https://now.northropgrumman.com/technology-in-the-2000s-of-codecs-and-copyrights/ |

| [Cohen17] | Bram Cohen, "The BitTorrent Protocol Specification", last modified February 2017. Retrieved November 2021, https://www.bittorrent.org/beps/bep_0003.html |

| [Pouwelse05] | J.A. Pouwelse, P. Garbacki, D.H.J. Epema, H.J. Sips, "The BitTorrent P2P File-Sharing System: Measurements and Analysis", Peer-to-Peer Systems IV. IPTPS, 2005, pp 205-216, https://pdos.csail.mit.edu/6.824/papers/pouwelse-btmeasure.pdf |

| [Karagiannis05] | T. Karagiannis, A. Broido, N. Brownlee, kc claffy, M. Faloutsos, "Is P2P dying or just hiding?", 2005, https://courses.cs.duke.edu/cps182s/spring05/readings/p2p-dying.pdf |

| [Baset04] | S.A. Baset, H.G. Schulzrinne, "An Analysis of the Skype Peer-to-Peer Internet Telephony Protocol", 2004, https://arxiv.org/ftp/cs/papers/0412/0412017.pdf |

| [Sar06] | Ernesto Van der Sar, "BitTorrent: The 'one-third of all Internet traffic' Myth", 2006, https://torrentfreak.com/bittorrent-the-one-third-of-all-internet-traffic-myth/ |

| [Bailey17] | Jonathan Bailey, "The Long, Slow Decline of BitTorrent", 2017 https://www.plagiarismtoday.com/2017/06/01/the-long-slow-decline-of-bittorrent/ |

| [Singel11] | Ryan Singel, "Netflix Beats BitTorrent's Bandwidth", 2011 https://www.wired.com/2011/05/netflix-traffic/ |

| [Kraw13] | Konrad Krawczyk, "BitTorrent traffic drops to 7 percent in US, but rises everywhere else", 2013 https://www.digitaltrends.com/computing/bittorrent-file-sharing-p2p-download-upload-downloading-uploading/ |

| [Smith16] | Chris Smith, "Netflix isn't just hurting pay TV, it's also slowly killing online piracy", 2013 https://bgr.com/entertainment/netflix-downloads-bittorrent-piracy/ |

| [Kisembe17] | Phillip Kisembe, Wilson Jeberson, "Future of Peer-to-Peer Technology with the Rise of Cloud Computing", 2017, https://www.researchgate.net/publication/319579735_Future_of_Peer-To-Peer_Technology_with_the_Rise_of_Cloud_Computing |

| [COST21] | "Historical Cost of Computer Memory and Storage". Retrieved November 2021, https://hblok.net/blog/posts/2017/12/17/historical-cost-of-computer-memory-and-storage-4/ |

| [Clark12] | Jack Clark, "P2P storage: Can it beat the odds and take on the cloud?", 2012, https://www.zdnet.com/article/p2p-storage-can-it-beat-the-odds-and-take-on-the-cloud/ |

| [Yazti02] | Demetrios Zeinalipour-Yazti, "Exploiting the Security Weaknesses of the Gnutella Protocol", 2002, http://alumni.cs.ucr.edu/~csyiazti/courses/cs260-2/project/html/index.html |

| [Roettgers08] | Janko Roettgers, "5 Ways to Test if Your ISP Throttles P2P", 2008, https://gigaom.com/2008/04/02/5-ways-to-test-if-your-isp-throttles-p2p-2/ |

| [Kumb11] | Hareesh Kumbhinarasaiah, Manjaiah D.H., "Peer-to-Peer Live Streaming and Video On Demand Design Issues and its Challenges", 2011, https://www.researchgate.net/publication/51959957_Peer-to-Peer_Live_Streaming_and_Video_On_Demand_Design_Issues_and_itsChallenges |

| [Xu06] | D. Xu, S.S. Kulkarni, "Analysis of a CDN-P2P hybrid architecture for cost-effective streaming media distribution", Multimedia Systems, 2006, pp 383-399, DOI 10.1007/s00530-006-0015-3, https://link.springer.com/content/pdf/10.1007/s00530-006-0015-3.pdf |

| P2P | Peer-to-Peer |

| ARPANET | Advanced Research Projects Agency Network |

| RFC | Request for Comments |

| TCP/IP | Transmission Control Protocol/Internet Protocol |

| HTTP | Hypertext Transfer Protocol |

| HTML | HyperText Markup Language |

| CERN | European Organization for Nuclear Research |

| ISP | Internet Service Provider |

| CDN | Content Delivery Network |

| SDN | Software-Defined Networking |