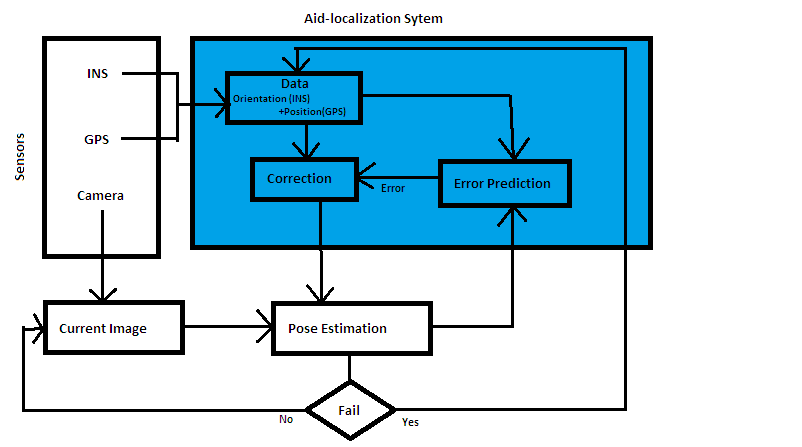

Figure 1: Hybrid Localization System

| Nathan Bodnar |

Mobile Based Augmented Reality is a new but quickly growing field of mobile applications. It integrates information-based virtual reality with the physical reality of the real world. This paper examines how this connection is made and what practical and enjoyable applications can be built with this technology.

Mobile Based Augmented Reality, Tracking, ARToolKit

The advent of the iTunes application store for both the iPhone and iPod touch, along with the release of Google's open source Android operating system for mobile devices, has created a large spike in public interest in mobile applications. This has inspired the creation of mobile applications in many different forms, and one of the most promising so far is the implementation of mobile based augmented reality applications. These applications combine virtual data in an interactive way with the physical reality of a mobile device's surroundings. This new technology has widespread applications, from making games more interactive to aiding highly technical and complex building projects.

Section 1 briefly describes the background of mobile augmented reality, which is non-mobile augmented reality. Section 2 describes various past techniques used for mobile augmented reality and the problems they had. Section 3 presents more recent solutions to the issues raised in Section 2. Section 4 describes a few of the most recently developed applications of mobile based augmented reality. Finally, Section 5 summarizes the topics covered in the rest of the paper.

The background of mobile based augmented reality, which is non-mobile augmented reality, dates back to the early 1960s, when a patent was awarded for Sensorama, an environmental simulator which added its own visuals, sounds and smells to the natural world [Heilig62]. The first computer based application of the technology was Videoplace, which allowed people to interact physically with a virtual environment [Krueger91]. Many other applications have since been developed using augmented reality, which has come to be defined as having three main components [Azuma97]:

1. The combination of the real and virtual worlds, so that real world actions have virtual world implications.

2. Real time interactivity, so that the virtual world adjusts almost instantly to the user's real world actions.

3. The responses of the virtual world happen in 3D. This is the one standard generally not implemented in mobile augmented reality.

One of the biggest issues for augmented reality is the seamless combination of the real and virtual worlds for visual display, and the tool most commonly used to facilitate this combination is the ARToolKit. Created in 1999 by Hirokazu Kato, this kit is a basic library of computer vision tools which allow virtual images to be easily added to real world camera shots. The ARToolKit calculates the real camera's position relative to the captured image, which allows a virtual camera to be placed at the same spot in the virtual or augmented reality. This enables virtual objects to be easily added to the view of the real world [Kato99].
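The geometric idea of placing a virtual camera at the real camera's position can be illustrated with a short sketch. It assumes a tracker that reports a marker's pose in camera coordinates as a 3x3 rotation matrix `R` and a translation vector `t`, so that `p_camera = R * p_marker + t`; the camera's position in the marker's frame is then `-R^T t`. The function name and the sample numbers are hypothetical and are not part of the ARToolKit API.

```python
import math


def camera_position_in_marker_frame(rotation, translation):
    """Return -R^T t, the camera origin expressed in marker coordinates.

    Assumes p_camera = R * p_marker + t, with `rotation` given as three rows.
    """
    return [
        -sum(rotation[row][col] * translation[row] for row in range(3))
        for col in range(3)
    ]


# Hypothetical reading: marker rotated 90 degrees about the viewing axis,
# 0.1 m to the side of and 0.5 m in front of the camera.
angle = math.radians(90)
R = [
    [math.cos(angle), -math.sin(angle), 0.0],
    [math.sin(angle), math.cos(angle), 0.0],
    [0.0, 0.0, 1.0],
]
t = [0.1, 0.0, 0.5]
print(camera_position_in_marker_frame(R, t))
```

A renderer would place its virtual camera at the returned position so that virtual objects drawn in the marker's frame line up with the camera image.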

While most of the issues faced by mobile based augmented reality are not peculiar to its mobile nature, there are two main issues which mobile augmented reality must address. These are tracking the location of the user's device and deciding where the calculations needed to combine the virtual and real worlds will be done. Both of these issues have seen many different solutions over the course of the technological development of augmented reality. We will begin by looking at some of the older solutions to these problems. These solutions have issues which have been addressed by later solutions (which of course created their own sets of issues in turn), although many of them are still commonly used today.

The basic difference between mobile and non-mobile augmented reality is, of course, mobility. While the ability to move while still maintaining the validity of the virtual and real world combination is very convenient, it poses the obvious challenge of having to continuously determine exactly where a mobile device is at all times. Two basic solutions have been used historically to solve this problem.

Visual tracking uses landmarks and other characteristics of an image to figure out where in known space the camera that took the image (in this case the mobile device) is located. Given an image with a distinct enough object in it, this sort of tracking can be very accurate. For example, several iPhone apps allow you to take a picture of a landmark like the St. Louis Arch and send it to a service which figures out that you have photographed the arch and then returns general information about it. However, this form of location finding has numerous downsides. First, it is very computationally intensive, so much so that even on modern cellular devices with increased computing power this task is generally done off the device. This prevents it from being a truly usable system if the position of the user's device must be known accurately at all times. Second, it is very susceptible to external influences like lighting or other atmospheric effects. This means apps which depend upon it may not work if it is too sunny or too rainy, which often limits it to indoor use. The final issue is that this technology is limited to recognizing those landmarks which it has been programmed to recognize. That means it can only know where it is in a small area, like a room where all the objects have been registered, or when it views an object which is famous enough to have been added to its databanks [Jun99].

Inertial tracking is the use of sensors to create a Six Degrees of Freedom (6DoF) picture, not of where the object is, but of how it has moved relative to its previous location. The starting requirement of this system is an initial position, which must be provided by some external method. Once given a starting point, inertial tracking uses motion sensors (generally three accelerometers and three gyroscopes) to calculate the amount of movement of a device, both translationally and rotationally. Combined with knowledge of the starting position, this allows the calculation of the end position. This requires less recalculation than visual tracking, since future measurements are based upon former calculations. However, the sensors must be precisely calibrated and frequently recalibrated or they will be inaccurate. Even tiny errors in accuracy can snowball, because each successive calculation is based upon the last one. Because of this, and the improbability of having no errors in calculation over any extended period of time, this method is inherently less accurate than visual tracking, although it is much faster [Lang02].
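The way small sensor errors snowball can be seen in a minimal one-dimensional dead-reckoning sketch. It assumes a device that is actually at rest, an accelerometer with a small constant bias, and simple Euler integration; the bias value, sample rate, and function name are illustrative assumptions and are not taken from [Lang02].

```python
def dead_reckon(accel_samples, dt, start_position=0.0, start_velocity=0.0):
    """Integrate acceleration twice to estimate position along one axis."""
    position, velocity = start_position, start_velocity
    for accel in accel_samples:
        velocity += accel * dt      # each velocity builds on the previous one
        position += velocity * dt   # each position builds on the previous one
    return position


BIAS = 0.01   # assumed constant accelerometer error, in m/s^2
DT = 0.01     # assumed sample interval, in seconds (100 Hz)

# The device never moves, so every reported sample is pure bias.
drift_after_10s = dead_reckon([BIAS] * 1000, DT)
drift_after_60s = dead_reckon([BIAS] * 6000, DT)
print(drift_after_10s, drift_after_60s)
```

Under these assumptions the position error grows with the square of elapsed time: roughly half a metre after 10 seconds and roughly 18 metres after 60 seconds, which is why an external position fix is needed from time to time.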

Thin shell computing is simply the execution of calculations on an external server rather than on the mobile device itself. This was popular in early mobile augmented reality because it allows the computational load, which is often very intensive, to be shifted off the device, which often has severely limited computational power. This solution of course comes with its own inherent tradeoff: the calculation and creation of the augmented reality become slower and less efficient.
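The tradeoff can be made concrete with a rough back-of-the-envelope model. This is only a sketch under stated assumptions: the work is measured in abstract units, the device and server each process a fixed number of units per second, and the network adds a fixed round-trip delay. None of these parameters or names come from the cited work.

```python
def should_offload(work_units, device_rate, server_rate, round_trip_s):
    """Return True when sending the work to a server is expected to be faster.

    device_rate and server_rate are in work units per second;
    round_trip_s is the assumed network delay in seconds.
    """
    local_seconds = work_units / device_rate
    remote_seconds = round_trip_s + work_units / server_rate
    return remote_seconds < local_seconds


# A heavy job on a slow device: the network delay is worth paying.
print(should_offload(work_units=100, device_rate=10, server_rate=1000, round_trip_s=0.5))

# A light job: the round trip costs more than computing locally.
print(should_offload(work_units=1, device_rate=10, server_rate=1000, round_trip_s=0.5))
```

In this model, offloading pays off only when the computation saved exceeds the network delay, which matches the observation that faster phones make external servers less necessary.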

Modern work in augmented reality has faced the same basic issues as always in its expansion to mobile devices and has come up with some very innovative solutions to these problems. These solutions have not replaced the previously discussed methods of tracking and computing, at least not completely, but they do provide better alternatives when applied in the right situation.

Tracking location in mobile augmented reality is largely a balancing game between the accuracy of the positioning and the amount of computational power required to implement such a system. Solutions to the problem must necessarily lean one way or the other, depending on the situation for which the tracking system is being implemented.

One example of a tracking system which has chosen to embrace accuracy over speed or ease of computation is the Hybrid Localization System (HLS). This system is for the most part just an implementation of vision-based tracking, but it has one major improvement. As previously noted, one of the major problems with vision-based tracking is the difficulty posed in outdoor scenarios by varying atmospheric factors like lighting or weather. This system attempts to avoid this issue by implementing both a vision-based tracking system and a correctional system called Aid-Localization [Zendjebil08].

The idea behind HLS is that visual tracking is, for the most part, the best way to determine location for mobile augmented reality, but it does make mistakes, especially in outdoor situations, and when it does, Aid-Localization can catch and correct these errors. The system strives not just to correct the errors of visual tracking but also to make sure that it can continue to function even if any one of its sensors has been compromised [Zendjebil08].

Aid-Localization is made up of two components: a GPS (Global Positioning System) receiver and an inertial sensor (INS). The GPS provides the location of the mobile device, while the inertial sensor allows an estimate to be made of the orientation of the camera [Zendjebil08].

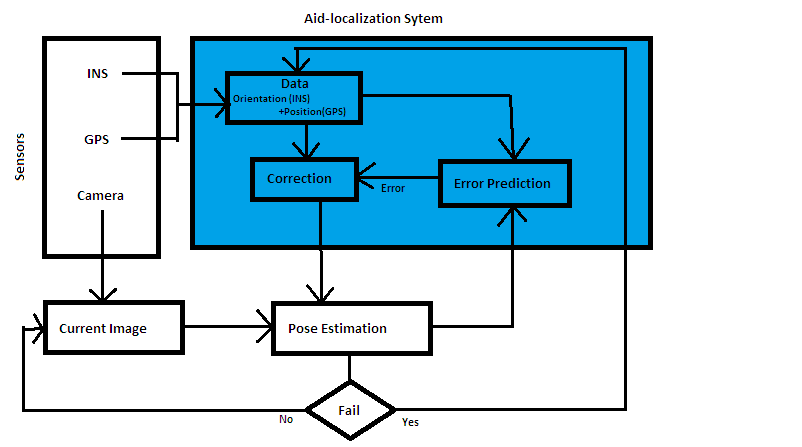

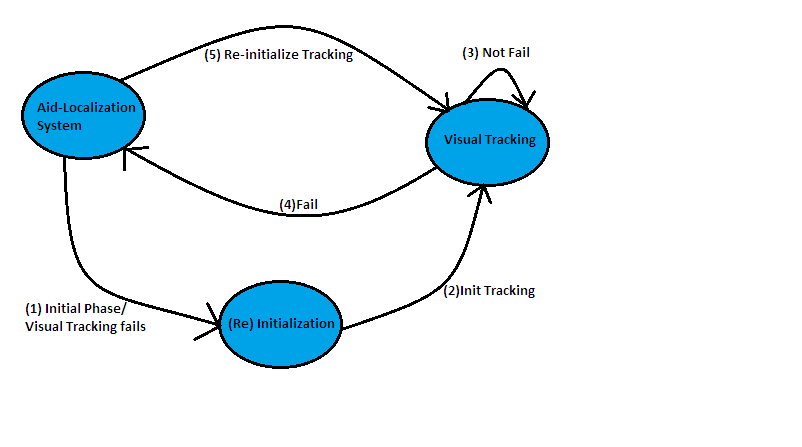

Figure 2: Hybrid Localization State Machine

As shown in Figures 1 and 2, HLS follows a very simple pattern. The initial location of a mobile device is determined by the Aid-Localization system. This initial location is passed to the visual tracker, which checks its validity and is then used continuously to estimate pose until it fails to provide a correct estimate. At that point its estimate is relayed to the Aid-Localization subsystem, which calculates the difference between the visual estimate and its own calculation of location, uses this difference as a correcting factor, and relays the corrected location back to the visual tracker, which continues to calculate location until it fails again [Zendjebil08].
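The control flow described here can be sketched as a small loop. This is a loose illustration and not the estimator from [Zendjebil08]: it assumes 2D positions, treats the visual tracker as failed when it returns nothing or strays more than a hypothetical `max_gap` from the Aid-Localization estimate, and simply falls back to the Aid-Localization estimate as the corrected location. All names are illustrative.

```python
from math import dist


def hybrid_localize(vision_poses, aid_poses, max_gap=5.0):
    """Return one (source, pose) pair per time step.

    vision_poses: (x, y) estimates from the visual tracker, or None on failure.
    aid_poses:    (x, y) estimates from the GPS/inertial subsystem.
    max_gap:      assumed distance beyond which vision is treated as failed.
    """
    track = []
    for step, (vision, aid) in enumerate(zip(vision_poses, aid_poses)):
        if step == 0:
            # Aid-Localization supplies the starting location.
            track.append(("aid-init", aid))
            continue
        vision_failed = vision is None or dist(vision, aid) > max_gap
        if vision_failed:
            # Hand over to Aid-Localization for a corrected location.
            track.append(("aid-correction", aid))
        else:
            track.append(("vision", vision))
    return track


vision = [None, (1.0, 0.0), (50.0, 0.0), (3.0, 0.0)]
aid = [(0.0, 0.0), (1.2, 0.0), (2.1, 0.0), (3.3, 0.0)]
for source, pose in hybrid_localize(vision, aid):
    print(source, pose)
```

In the sample run the third visual estimate is far from the Aid-Localization estimate, so that step is corrected and visual tracking resumes on the next one.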

While it might seem that it is always a good idea to have as complete a picture as possible of where a mobile device is and what it is looking at when creating an augmented reality, this is not always the case. Because doing so can significantly reduce computation time, many tracking methods try to eliminate as much information gathering as they can [Xing09].

A prototypical example of such a method is Sensor Net Tracking (SNT), which requires only the ability to determine the strength of incoming signals to provide the needed augmented reality content [Xing09].

SNT is set up to work in an environment where every item which has information that needs to be accessed in the augmented world has an RFID (Radio Frequency Identification) chip implanted in it. Any mobile device equipped for SNT has the ability to assess the strength of these signals. When the device has determined the strongest signal, it accesses the information carried by that signal, which is a set of links to online sites such as Wikipedia, Twitter or whatever site is relevant to the object. The person can then choose which sites to access or have all the sites automatically opened by the mobile device. As a person moves closer to an object of interest, the signal of its RFID chip becomes the strongest, allowing the material of interest to that person to appear on their mobile device [Xing09].
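The selection step can be sketched in a few lines. The sketch assumes signal strength is reported in dBm, where a value closer to zero is stronger, and that the links for each tag are already known to the device; the tag identifiers, links, and function name are made up for illustration and are not from [Xing09].

```python
def links_for_strongest_tag(readings, links_by_tag):
    """Return the links attached to the tag with the strongest signal.

    readings:     tag id -> signal strength in dBm (closer to zero is stronger).
    links_by_tag: tag id -> list of URLs associated with that object.
    """
    if not readings:
        return []
    strongest_tag = max(readings, key=readings.get)
    return links_by_tag.get(strongest_tag, [])


# Hypothetical scan taken while standing next to a statue.
readings = {"tag-statue": -48.0, "tag-fountain": -71.5, "tag-bench": -80.0}
links_by_tag = {
    "tag-statue": ["https://en.wikipedia.org/wiki/Statue"],
    "tag-fountain": ["https://en.wikipedia.org/wiki/Fountain"],
}
print(links_for_strongest_tag(readings, links_by_tag))
```

No position or orientation is computed at all; walking toward a different object changes which reading is largest and therefore which links appear.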

Obviously this is a much simplified version of augmented reality, but it is a great example of the move seen across the field of mobile augmented reality to reduce computation by providing only the features needed for a given objective and no more.

As time has progressed, the capacity of cellular phones to do computation has greatly increased, and worries over where to do the calculations for large applications such as augmented reality have largely become a moot point. While some applications still choose to outsource their computations, it is no longer really necessary; almost all computations can be performed on the mobile device itself. Of course, not everyone owns a phone of the quality of the iPhone or Droid, so some applications are still limited to running computations on external servers.

Like all technology, MBAR is designed for application, and a great deal of the recent work with this technology has been in creating new applications. The technology is very versatile, with applications ranging from aids for complicated assembly to advanced consumer content.

One of the more commercial applications of mobile augmented reality is the idea of enhanced advertisement. The application would be based upon visual localization. The user would aim their mobile phone at the barcode on any item in a store and take a picture. The picture would be analyzed using computer vision techniques, and the mobile phone would then display three-dimensional avatars on top of its image of the scanned object. These avatars would represent the various types of people, from sales associates to previous customers, who had information to share about the item in question. Specific avatars could be selected and communicated with via voice commands, and they would respond with text boxes. This would allow a user to investigate all the facets of a product before making a purchase, but would also allow the product to sell itself in a way not possible before, making it a help to both the consumer and the producer [Guven09].

Interactive games between mobile phones are nothing new in the application world, but augmented reality in conjunction with interactivity opens up a whole new world of possible games. One recently created example is a tennis game which can be played either with another player or solo. The game is triggered by pointing a game-equipped mobile phone at one of the trigger shapes defined in the ARToolKit. If multiple users are within Bluetooth range of each other when they start the game, they can connect via a simple peer-to-peer communications layer, with one phone acting as the server and the other as a client. As long as both phones remain focused on the ARToolKit image, they will both have a virtual tennis court projected onto their screens. The server holds the ball at first and can release it by hitting the '2' key. From then on, game play continues as it would in tennis, with each user volleying the ball by moving the device in front of the ball as it is projected on their screen. A simple physics engine, along with the ARToolKit based calculation of where the phone is in virtual space, allows the game to be played without any button controls [Henrysson05].

Many applications exist which apply MBAR to games or recreational pursuits, but this is not the exclusive domain of such technology. One of the first major uses of non-mobile augmented reality was developed by L. B. Rosenberg for the Air Force to aid in the construction of planes. Mobile augmented reality has not strayed too far from this root and is used to provide visual help via mobile phone to people completing complicated mechanical tasks. The process works as a series of images sent back and forth between the mobile user and a central server. With many modern phones the work could be done solely on the mobile phone side, but this application does not do so. The process begins when a person finds that they are having trouble with some complex mechanical job. The person needs only to take a picture of the object being worked on and send it, along with a description of the job to be completed, to the server. The server then analyzes where the machine is in the process of the described job. Once it has figured this out, the server gives the user the option of moving back a step, seeing a new view of the current step, or seeing the next step to be completed. It sends an animation showing whichever one the user wants and waits. When the user wants to continue to the next step, they send a new picture of the object being worked on, and the steps continue this way until the job is completed [Hakkarainen08].
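The server's side of this exchange can be sketched as a simple step selector. The sketch leaves out the image analysis entirely and assumes the server has already recognized which step the photographed object is at; the option names and the function are hypothetical and are not taken from [Hakkarainen08].

```python
def step_to_animate(recognized_step, choice, total_steps):
    """Pick which assembly step the server should animate next.

    recognized_step: zero-based step the photographed object appears to be at.
    choice:          "back", "new_view" or "next", as selected by the user.
    total_steps:     number of steps in the described job.
    """
    if choice == "back":
        return max(recognized_step - 1, 0)
    if choice == "new_view":
        return recognized_step  # same step, shown from a different angle
    if choice == "next":
        return min(recognized_step + 1, total_steps - 1)
    raise ValueError(f"unknown choice: {choice!r}")


# The photo shows the object at step 2 of a 5-step job.
print(step_to_animate(2, "next", total_steps=5))
print(step_to_animate(2, "back", total_steps=5))
```

Each new photo from the user restarts the cycle: recognize the current step, offer the three options, and send back the matching animation.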

Mobile Based Augmented Reality is a rapidly growing field which shows a lot of promise for supporting both fun and useful tools. Its major challenge at the moment is to provide its users with as much functionality as possible without overloading the still relatively small computational power of most mobile devices. This balance is made much easier by the recent increase in mobile computing power, but it still deserves attention so that such applications do not preclude running multiple applications at a time. The field is still growing, but there are already many exciting examples of how this technology can be applied.

[Azuma97] Azuma, Ronald, "A Survey of Augmented Reality," Presence: Teleoperators and Virtual Environments, pp. 355–385, August 1997, http://www.cs.unc.edu/~azuma/ARpresence.pdf

A survey of augmented reality in 1997.

[Guven09] Guven, S.; Oda, O.; Podlaseck, M.; Stavropoulos, H.; Kolluri, S.; Pingali, G., "Social mobile Augmented Reality for retail," IEEE International Conference on Pervasive Computing and Communications (PerCom 2009), pp. 1-3, 9-13 March 2009, http://ieeexplore.ieee.org/document/4912803/

Describes application of mobile augmented reality for a retail setting.

[Hakkarainen08] Hakkarainen, M.; Woodward, C.; Billinghurst, M., "Augmented assembly using a mobile phone," 7th IEEE/ACM International Symposium on Mixed and Augmented Reality (ISMAR 2008), pp. 167-168, 15-18 Sept. 2008, http://ieeexplore.ieee.org/document/4637349/

Describes application of mobile augmented reality for complex assembly jobs.

[Heilig62] Heilig, Morton, "Sensorama Simulator," United States Patent Office, Patent 3050870, 28 Aug. 1962.

Patent for the first augmented reality device.

[Henrysson05] Henrysson, A.; Billinghurst, M.; Ollila, M., "Face to face collaborative AR on mobile phones," Proceedings of the Fourth IEEE and ACM International Symposium on Mixed and Augmented Reality, pp. 80-89, 5-8 Oct. 2005, http://ieeexplore.ieee.org/document/1544667/

Describes a collaborative augmented reality tennis game for mobile phones.

[Jun99] Park, Jun; Jiang, Bolan; Neumann, U., "Vision-based pose computation: robust and accurate augmented reality tracking," Proceedings of the 2nd IEEE and ACM International Workshop on Augmented Reality (IWAR '99), pp. 3-12, 1999, doi: 10.1109/IWAR.1999.803801, http://www.ieeexplore.ieee.org/document/803801/

Describes vision-based tracking.

[Kato99] Kato, H.; Billinghurst, M., "Marker tracking and HMD calibration for a video-based augmented reality conferencing system," Proceedings of the 2nd IEEE and ACM International Workshop on Augmented Reality (IWAR '99), October 1999.

Describes ARToolKit.

[Krueger91] Krueger, Myron W., Artificial Reality, Reading, Mass.: Addison-Wesley, 1991.

Book about the start of computer virtual reality.

[Lang02] Lang, P.; Kusej, A.; Pinz, A.; Brasseur, G., "Inertial tracking for mobile augmented reality," Proceedings of the 19th IEEE Instrumentation and Measurement Technology Conference (IMTC/2002), vol. 2, pp. 1583-1587, 2002, http://ieeexplore.ieee.org/document/1007196/

Describes inertial tracking.

[Xing09] Liu, Xing; Alpcan, T.; Bauckhage, C., "Adaptive wireless services for augmented environments," 6th Annual International Mobile and Ubiquitous Systems: Networking & Services (MobiQuitous '09), pp. 1-8, 13-16 July 2009, http://ieeexplore.ieee.org/document/5326401/

Describes sensor net tracking.

[Zendjebil08] Zendjebil, I.M.; Ababsa, F.; Didier, J.-Y.; Mallem, M., "Hybrid Localization System for Mobile Outdoor Augmented Reality Applications," First Workshops on Image Processing Theory, Tools and Applications (IPTA 2008), pp. 1-6, 23-26 Nov. 2008, http://ieeexplore.ieee.org/document/4743733/

Presents a hybrid scheme for object localization.

| Acronym | Meaning |
| --- | --- |
| 6DoF | Six Degrees of Freedom |
| AL | Aid-Localization |
| GPS | Global Positioning System |
| HLS | Hybrid Localization System |
| INS | Inertial Sensor |
| MBAR | Mobile Based Augmented Reality |
| RFID | Radio Frequency Identification |
| SNT | Sensor Net Tracking |

Last Modified: April, 2010